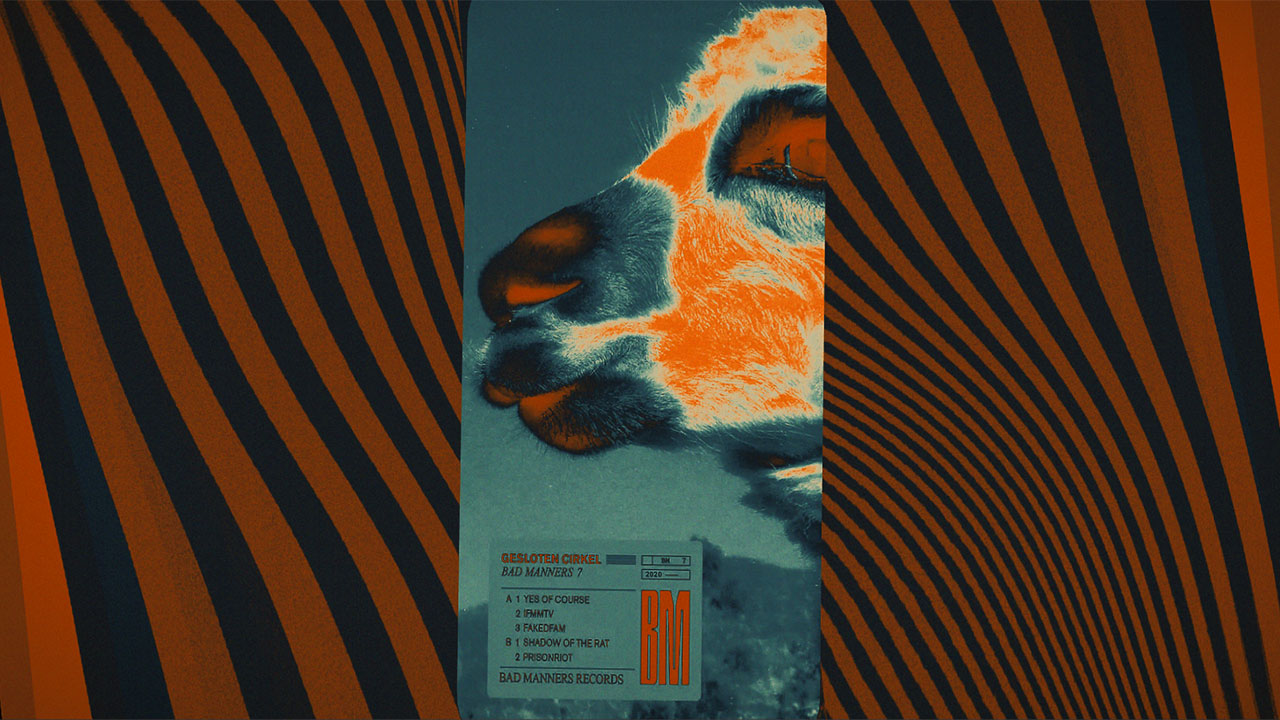

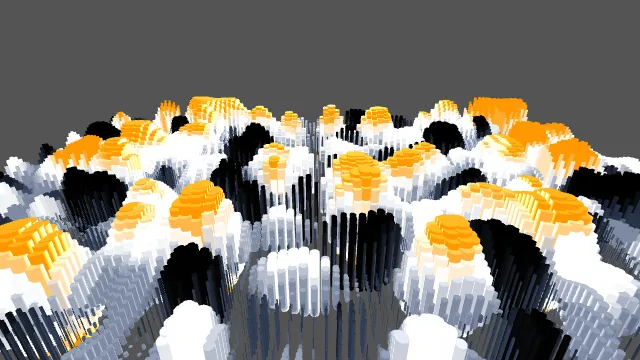

Bad Manners Records BM7

Gesloten Cirkel

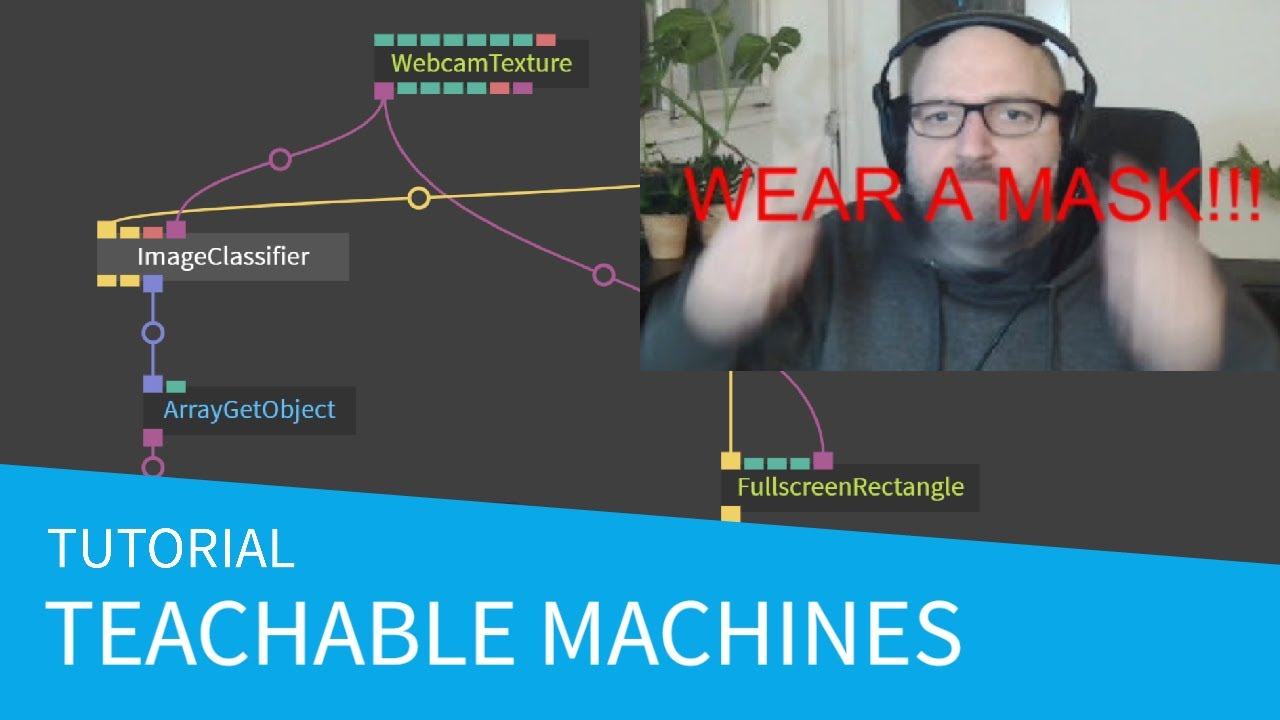

music video made by photoevaporation

We are starting a new cables jam that you can participate in, right now.

Hey,

These Ops give you some easy ways to manipulate your instanced meshes without re-uploading everything to the GPU every frame.

We are often asked how putting cables patches on your own website works and sometimes we cannot help because every web-hoster does stuff differently.

When working with bigger datasets, nested JSON or remote APIs, finding the data points that really matter to you used to be tedious and spread over several ops, up until now.

Introducing a new handy little tool to check colors of your rendering.

We updated the ImageCompose and DrawImage ops to finally support transparency the way you would expect it.

Also worth mentioning:

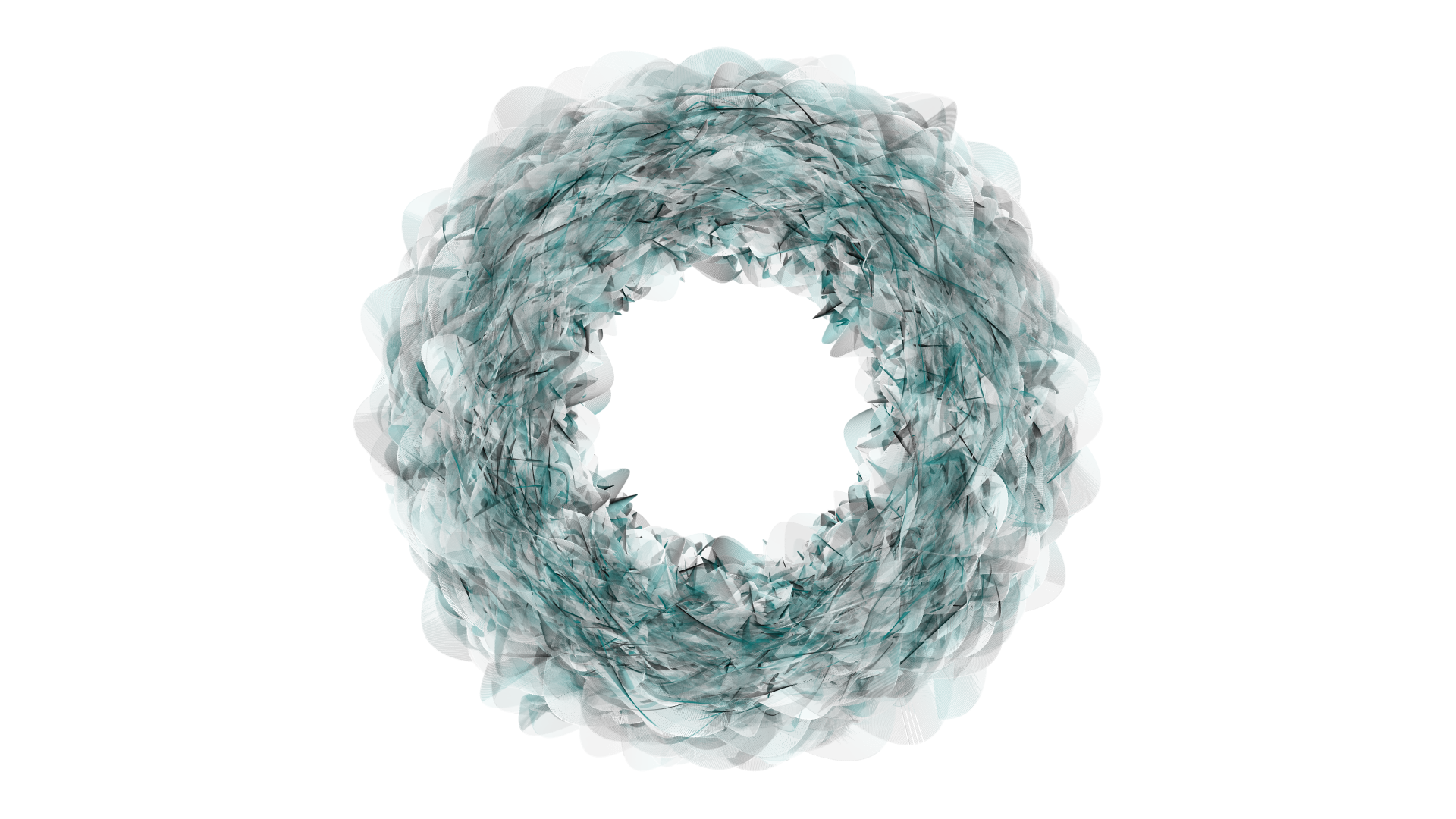

The last cables live stream was a copycat edition inspired by the digital artist Anders Hoff, otherwise known as Inconvergent.

I've always loved following his work and he's been a great source of inspiration to me throughout the years.

I'd highly recommend checking out his web page as there's a lot of beautiful digital artwork to be found over there.

This blog post is going to be a bit longer and more in-depth, as I'd like to highlight some things I learnt during and after the stream.

I hope you'll enjoy it.

Many digital art pieces made with Processing and custom software are based on having an animated system in place and not refreshing the canvas each frame.

This means that more and more information gets added to the picture.

A few generative rules can generate a huge amount of output.

This process isn't truly real time, because the image takes time to build up 🙂

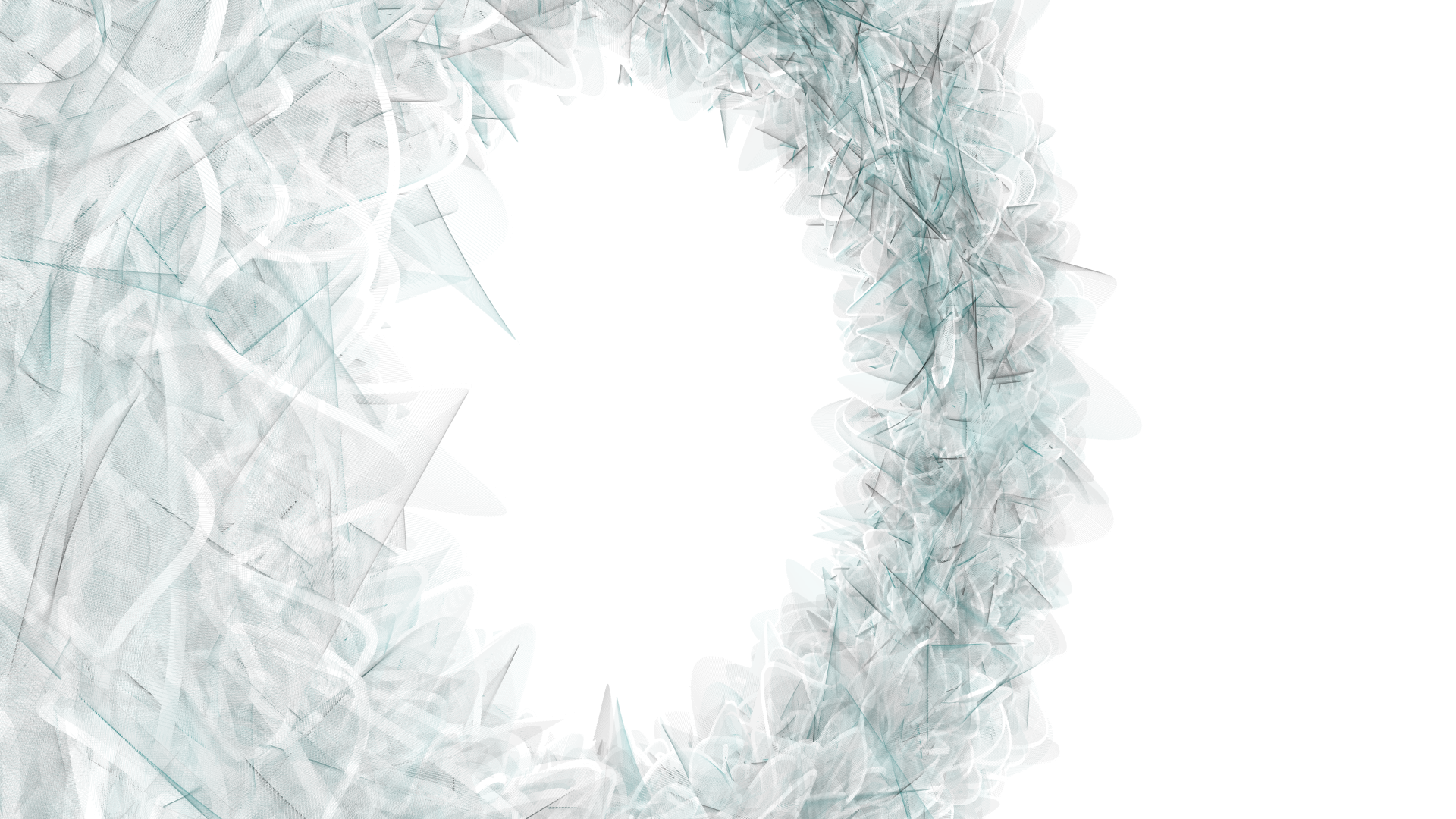

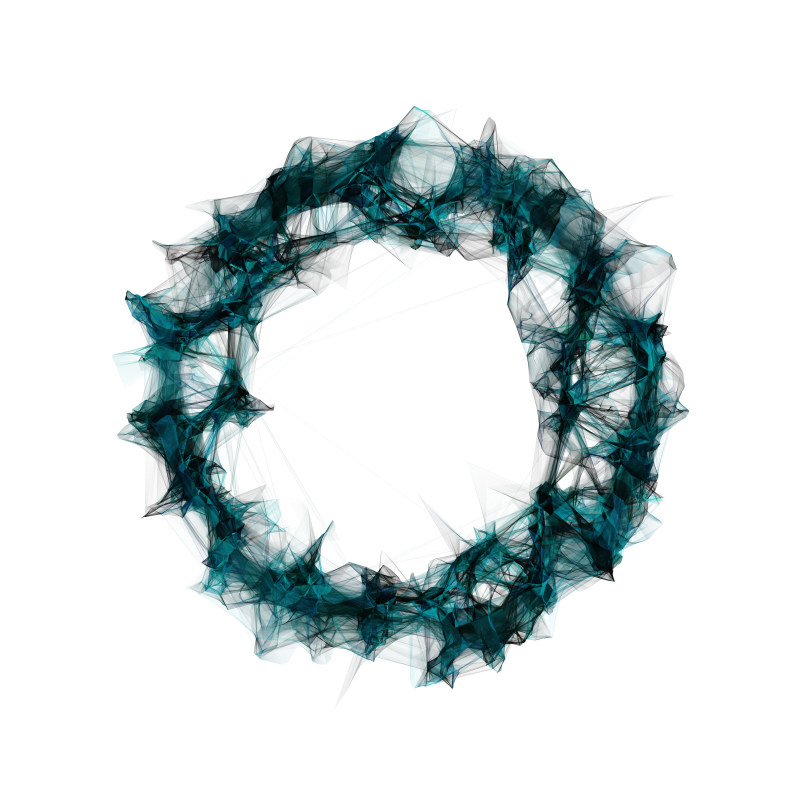

I was trying to recreate this look from Inconvergent's DOF tests.

It worked out pretty well 🙂

This patch shows what happens when we don't refresh the canvas and just animate a circle.

The color and the frequency are both tied to the timer.

It doesn't look so great but it gets across how the technique works.

The 3D scene has to be rendered into a texture. We then disable the clear option on the Render2texture op.

Every time we reset, we make sure that the clear color is set for one frame, which wipes everything that was already there.

Setting the alpha to a low number and letting the patch run adds more and more detail to the canvas.

That's the entire technique in a nutshell.
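To make the idea concrete outside of cables, here is a minimal sketch in plain Python. It is not how the patch or the Render2texture op is implemented; it only simulates the same behaviour with a small grayscale grid. All names (`make_canvas`, `draw_frame`, `run`, `reset_at`) and all numbers (canvas size, circle radius, alpha) are made up for illustration.

```python
import math

WIDTH, HEIGHT = 64, 64  # hypothetical canvas size


def make_canvas(clear_value=0.0):
    """Return a fresh canvas filled with the clear value (the 'clear' step)."""
    return [[clear_value] * WIDTH for _ in range(HEIGHT)]


def blend_point(canvas, x, y, value, alpha):
    """Alpha-blend one pixel over whatever is already on the canvas."""
    xi, yi = int(round(x)), int(round(y))
    if 0 <= xi < WIDTH and 0 <= yi < HEIGHT:
        canvas[yi][xi] = canvas[yi][xi] * (1.0 - alpha) + value * alpha


def draw_frame(canvas, t, alpha=0.05):
    """Draw a small circle whose position and brightness follow the timer t."""
    cx = WIDTH / 2 + 20 * math.cos(t * 0.7)
    cy = HEIGHT / 2 + 20 * math.sin(t * 1.3)
    brightness = 0.6 + 0.4 * math.sin(t * 0.2)  # always stays above zero
    for step in range(32):
        angle = 2 * math.pi * step / 32
        blend_point(canvas, cx + 4 * math.cos(angle),
                    cy + 4 * math.sin(angle), brightness, alpha)


def touched_pixels(canvas):
    """Count the pixels that have received any paint."""
    return sum(1 for row in canvas for v in row if v > 0.0)


def run(frames, clear_every_frame=False, reset_at=None):
    """Render a number of frames, optionally clearing each frame or once on reset."""
    canvas = make_canvas()
    for frame in range(frames):
        if clear_every_frame or frame == reset_at:
            canvas = make_canvas()  # clear for this one frame only
        draw_frame(canvas, t=frame * 0.1)
    return canvas


accumulated = run(200)                        # clear disabled: detail piles up
refreshed = run(200, clear_every_frame=True)  # normal rendering: one circle only
print(touched_pixels(accumulated), touched_pixels(refreshed))
```

With clearing disabled, the number of painted pixels keeps growing as the circle moves, while the per-frame clear only ever shows the latest circle. Passing `reset_at` clears the canvas for a single frame, which mirrors the reset described above.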

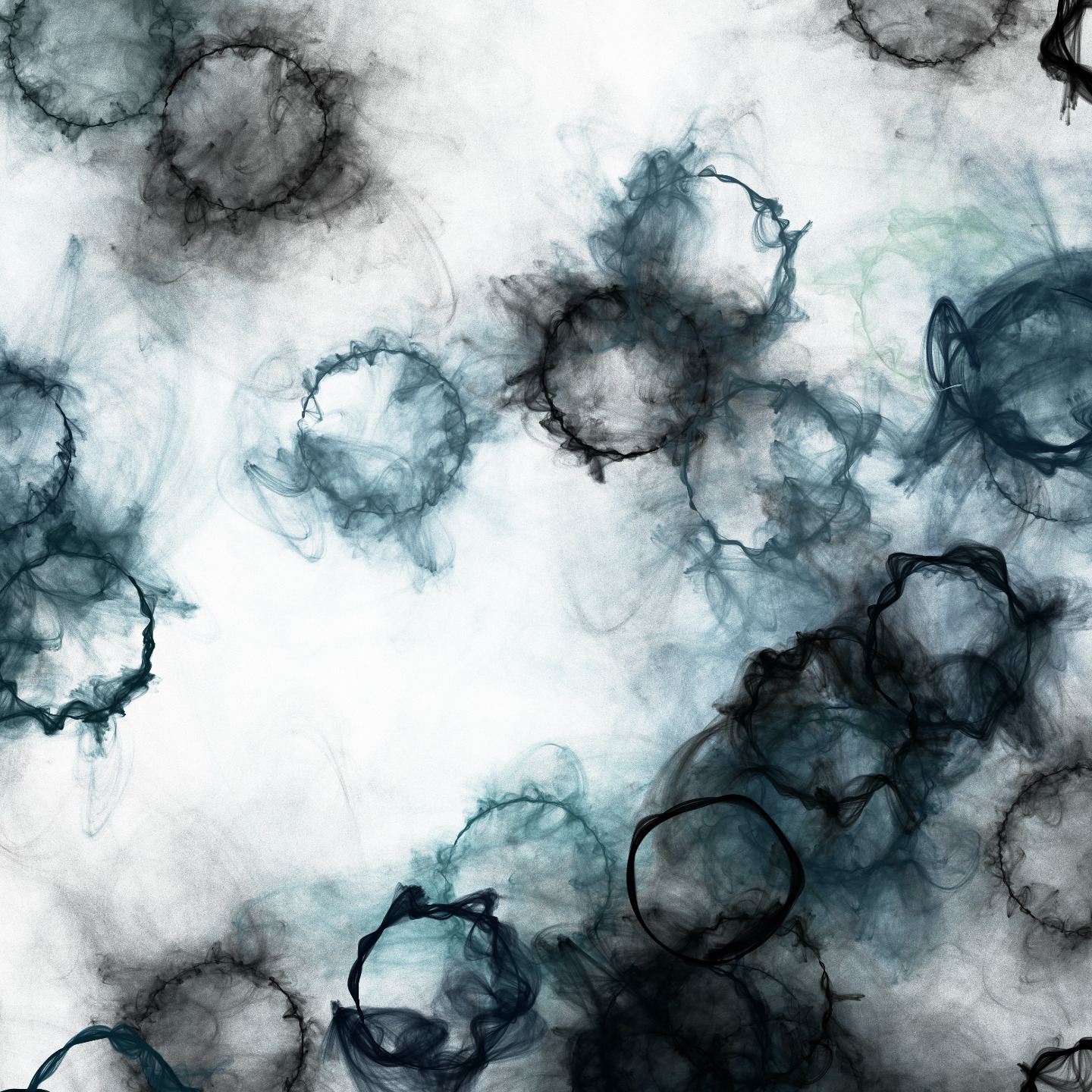

It's almost impossible to recreate a generative art piece exactly, so this stream focused on being inspired by the piece itself.

The original from Inconvergent is below.